In this OpenAI community topic, the poster wants to run the rows of a Google Sheet through an AI model and is worried about the inference costs.

> My Google Sheet has 3000 rows of companies description (approximately 100 words each).
>
> I have a script in Google Sheet to link it with GPT3.5 Turbo via API. I want to use the GPT3 to:
>
> - rewrite description in professional terms
> - provide 7 keywords per description.
>
> All in all, each description should represent approximately an input of 100 words and output of 100 + 7 words, eg. 207 words ~/~ tokens.
>
> I made a quick test on 5 descriptions, and it costs me 2.5$.
>
> This seems very expensive compared to the price list of openai, doesn’t it?

Yes, it does seem expensive. Fifty cents per description is not plausible for about 200 words of input and output, so something else must have been going on in the five-row test.
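
To put a number on "expensive", here is a back-of-the-envelope estimate. It is only a sketch: the tokens-per-word ratio (about 1.33) and the flat price of $0.002 per 1,000 tokens are assumptions for illustration, not figures from the thread, and the instruction text in each prompt is ignored. Check the current OpenAI price list before relying on the result.

```javascript
// Rough cost estimate for the job described in the post.
// ASSUMPTIONS (not from the source): a words-to-tokens ratio and a flat
// price per 1,000 tokens, both passed in as parameters.
function estimateCostUsd(rows, wordsPerRow, tokensPerWord, usdPer1kTokens) {
  const tokensPerRow = wordsPerRow * tokensPerWord;
  return (rows * tokensPerRow / 1000) * usdPer1kTokens;
}

// Hypothetical inputs: 207 words per row (from the post), 1.33 tokens per
// word and $0.002 per 1K tokens (both assumed).
const fiveRows = estimateCostUsd(5, 207, 1.33, 0.002);
const allRows = estimateCostUsd(3000, 207, 1.33, 0.002);
console.log("5 rows: $" + fiveRows.toFixed(4));
console.log("3000 rows: $" + allRows.toFixed(2));
```

Under these assumptions the five-row test should cost well under a cent, and even the full sheet should cost a few dollars at most. A $2.50 bill for five rows points to far more tokens being sent than the poster intended.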

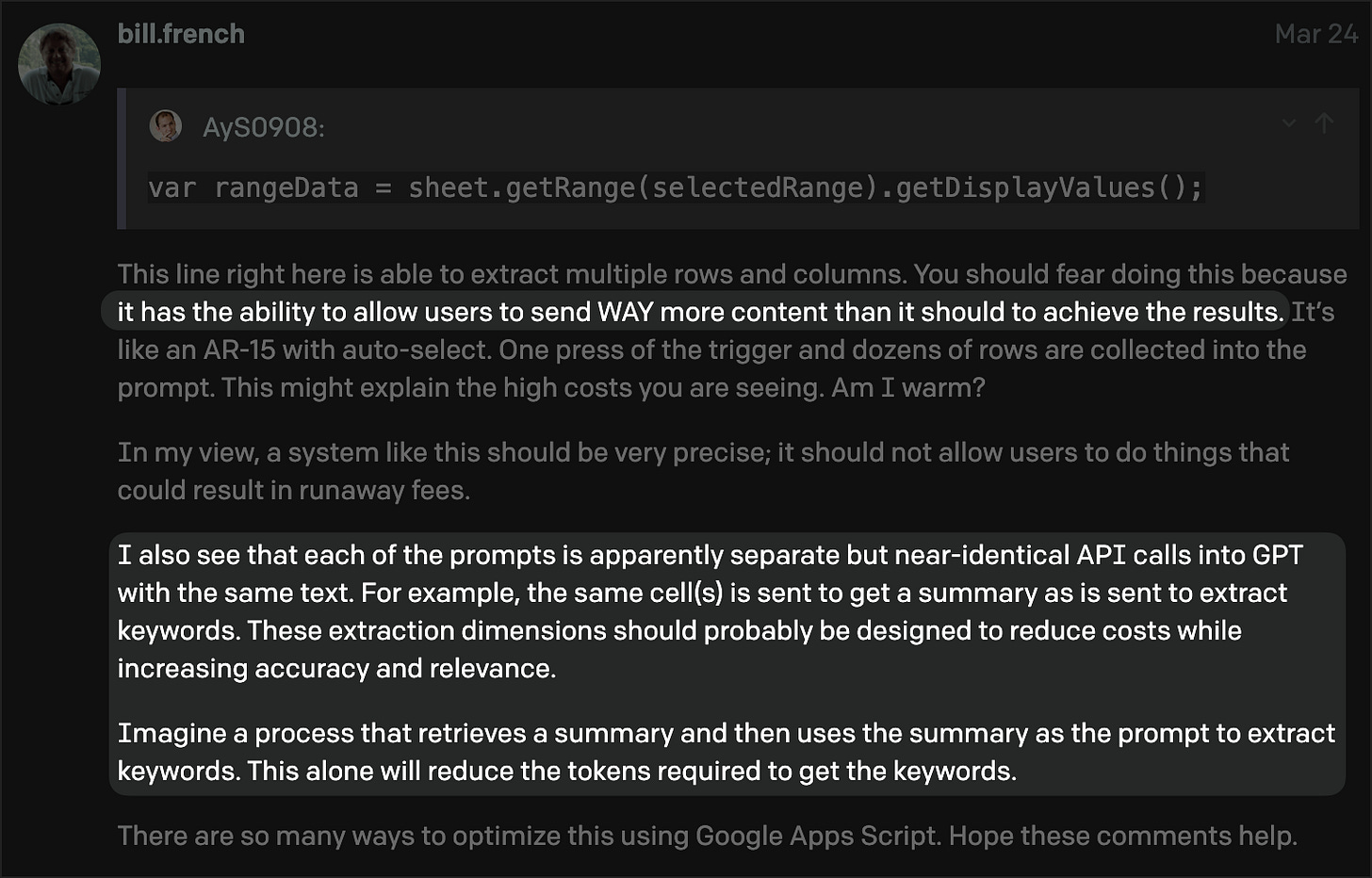

The problem was obvious following a quick glance at the Google Apps Script driving this process. The first highlighted passage is the mistake that burns tokens.

The second highlighted passage is an opportunity to cut costs in half. One of the incredible superpowers of AI is its ability to cascade inference steps, feeding the output of one step into the next. By using the summary to extract keywords, you are essentially carrying forward tokens you have already paid for, so the next step costs less.
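
A minimal sketch of that cascade, under stated assumptions: `complete` is a hypothetical function (prompt string in, completion string out) standing in for whatever makes the API call, and the prompt wording is invented for illustration. It is one reading of the idea, not the script from the thread.

```javascript
// Sketch of cascading two inference steps. `complete` is a hypothetical
// stand-in for the real API call (for example one made with UrlFetchApp
// in Apps Script); it takes a prompt string and returns the completion.
function rewriteAndTag(description, complete) {
  // Step 1: the original description is sent once, for the rewrite.
  const rewritten = complete(
    "Rewrite this company description in professional terms:\n" + description
  );
  // Step 2: keywords are extracted from the rewrite, so the original
  // description is not sent a second time.
  const keywordText = complete(
    "List 7 keywords, comma-separated, for this description:\n" + rewritten
  );
  const keywords = keywordText.split(",").map(function (k) {
    return k.trim();
  });
  return { rewritten: rewritten, keywords: keywords };
}
```

Passing `complete` in as a parameter keeps the cascade logic separate from the network call, which also makes it easy to dry-run with a fake function before spending any tokens.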

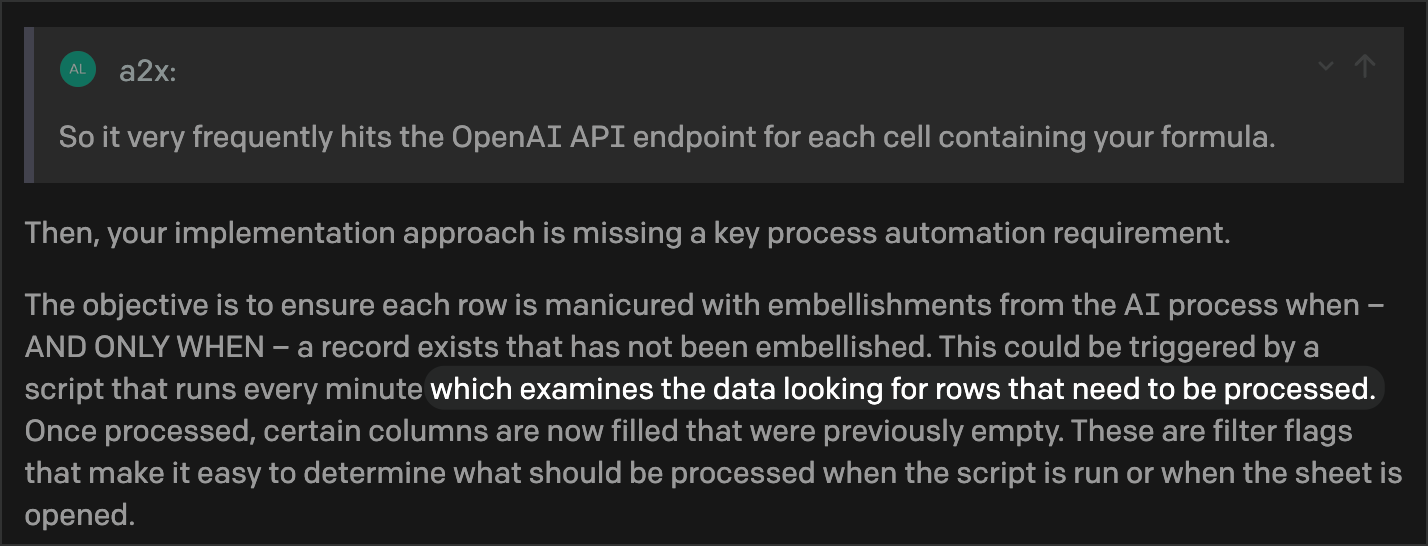

Lastly, ensure that the inferencing process is marginal: it should run only on what is new, and no inference should be repeated unnecessarily. One user suggests Google Sheets has no ability to remember what’s been processed.

> I recently built an app script for google sheets and noticed that by default Google Sheets won’t cache results. So it very frequently hits the OpenAI API endpoint for each cell containing your formula. I found a way to reduce the frequency of the app script refresh but I have not found a way to stop it completely once you’ve gotten one successful response per cell.

This limitation is easily overcome by ensuring the process looks only at rows that haven’t been processed yet. Obvious, right?
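
A minimal sketch of that check. The column layout (description in one column, rewritten text in another) and the function name are assumptions for illustration; the input mimics the 2D array that `Range.getValues()` returns in Apps Script.

```javascript
// Treat null, undefined, and whitespace-only cells as blank.
function isBlank(cell) {
  return cell === null || cell === undefined || String(cell).trim() === "";
}

// Return the indexes of rows that have an input but no output yet.
// `values` is a 2D array shaped like the result of Range.getValues();
// `inputCol` and `outputCol` are zero-based column indexes (assumed layout).
function findUnprocessedRows(values, inputCol, outputCol) {
  const pending = [];
  values.forEach(function (row, i) {
    if (!isBlank(row[inputCol]) && isBlank(row[outputCol])) {
      pending.push(i);
    }
  });
  return pending;
}

// Hypothetical sample data: row 0 is done, row 1 is pending, row 2 is empty.
const sample = [
  ["Acme makes anvils.", "Acme manufactures industrial anvils."],
  ["Globex sells widgets.", ""],
  ["", ""],
];
const pending = findUnprocessedRows(sample, 0, 1);
console.log(pending);
```

In a real script the array would likely come from something like `sheet.getDataRange().getValues()`, with results written back as plain values using `setValue` rather than left as formulas. Plain values do not recalculate, so each row is sent to the API only once.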

Takeaway

Invariably, good AI software is 80% non-AI stuff.